Here you can find some of the open projects I've completed in my free time (somewhat outdated; I will update it soon). For further details, check my GitHub.

2024

Ayoub EL HOUDRI

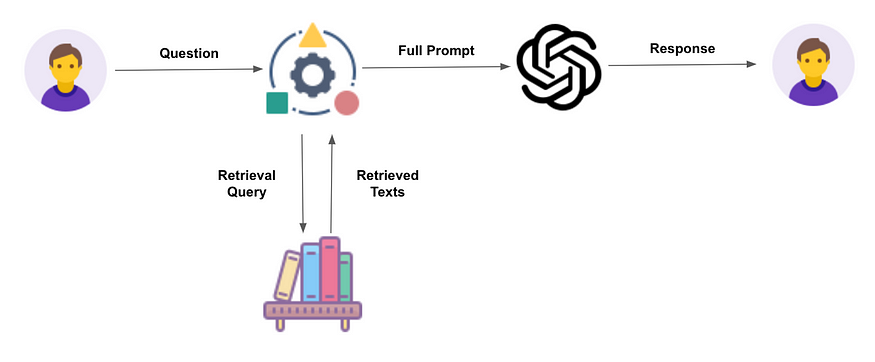

CookinAgent is a recipe retrieval system developed for fun using LangChain. It relies on the OpenAI LLM API for language generation and on the wttr API for weather information. To improve performance and scalability, CookinAgent stores embedded recipe data in a ChromaDB vector store, which allows recipes to be retrieved and manipulated efficiently and keeps responses to user queries fast and accurate.

In addition, CookinAgent features a user-friendly interface built with Streamlit. Through this interface, users interact with the agent: they enter their location and receive personalized recipe recommendations based on local ingredients and the current climate in their city.
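
The retrieval idea can be illustrated with a minimal, library-free sketch of similarity search over embedded recipes. This is not CookinAgent's code: the toy bag-of-words `embed` function stands in for a real embedding model, the in-memory `STORE` list stands in for the ChromaDB vector store, and the recipes and the weather string are hypothetical.

```python
import math
from collections import Counter

# Hypothetical toy recipe corpus (not the project's data).
RECIPES = {
    "tomato gazpacho": "cold tomato cucumber soup for hot summer days",
    "beef stew": "slow cooked beef carrot potato stew for cold rainy weather",
    "lemon salad": "fresh lemon herb salad light and cold for sunny days",
}

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' standing in for a real embedding model."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# In-memory "vector store": embeddings are computed once, up front.
STORE = [(name, embed(desc)) for name, desc in RECIPES.items()]

def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the names of the k recipes most similar to the query."""
    q = embed(query)
    ranked = sorted(STORE, key=lambda item: cosine(q, item[1]), reverse=True)
    return [name for name, _ in ranked[:k]]

# A weather description (e.g. obtained from wttr) is folded into the query text.
weather = "cold rainy"
print(retrieve(f"hearty dish for {weather} weather"))  # ['beef stew']
```

Folding the weather into the query text is one simple way to make retrieval climate-aware; a real agent could instead let the LLM rewrite the query or filter on recipe metadata.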

[Code]

Ayoub EL HOUDRI

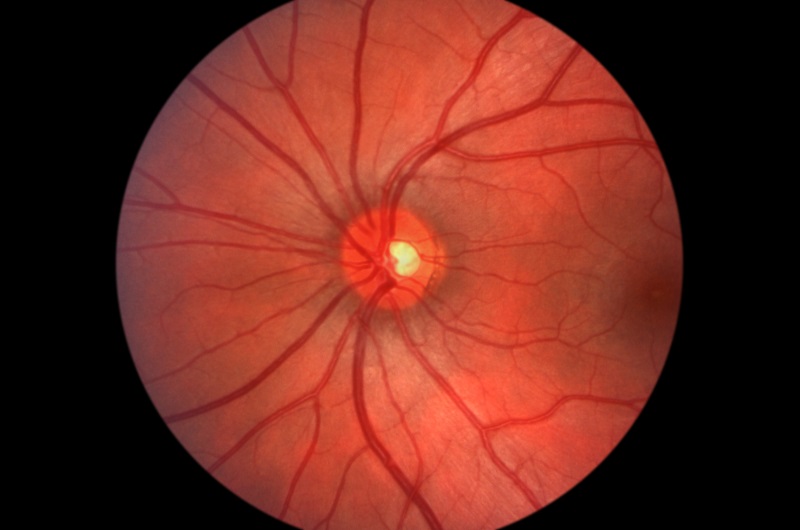

Glaucoma is a chronic eye disease that results in irreversible vision loss. The cup-to-disc ratio (CDR) is a crucial factor in the screening and diagnosis of glaucoma, making the accurate and automatic segmentation of the optic disc (OD) and optic cup (OC) from fundus images a critical task.

To address this, we present an open-source PyTorch implementation of the MNet model. Since no open implementation of this model existed, the goal is to provide a valuable resource for the medical field. This implementation is designed specifically for OD and OC segmentation in fundus images, making it a useful tool for glaucoma screening and diagnosis.
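
Once OD and OC masks have been predicted, the CDR can be derived from them. The sketch below uses the vertical CDR (vertical cup diameter divided by vertical disc diameter), one common definition; the toy masks are hypothetical NumPy arrays and this is not code from the MNet implementation.

```python
import numpy as np

def vertical_diameter(mask: np.ndarray) -> int:
    """Vertical extent (in pixels) of the foreground region of a binary mask."""
    rows = np.flatnonzero(np.any(mask > 0, axis=1))
    return int(rows[-1] - rows[0] + 1) if rows.size else 0

def vertical_cdr(disc_mask: np.ndarray, cup_mask: np.ndarray) -> float:
    """Vertical cup-to-disc ratio computed from binary OD and OC masks."""
    disc = vertical_diameter(disc_mask)
    if disc == 0:
        raise ValueError("empty optic disc mask")
    return vertical_diameter(cup_mask) / disc

# Toy 10x10 masks: the disc spans rows 1-8, the cup spans rows 3-6.
disc = np.zeros((10, 10), dtype=np.uint8)
disc[1:9, 2:8] = 1
cup = np.zeros_like(disc)
cup[3:7, 4:6] = 1
print(vertical_cdr(disc, cup))  # 0.5
```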

[Article] [Code]

Ayoub EL HOUDRI

This is a fun side project that forecasts whether a player will continue their career in the NBA for the next five years. It uses an XGBoost classifier trained on player statistics such as points scored and shots attempted.

The model classifies players according to their likelihood of staying in the league. After being developed, trained, and tested, the predictor was integrated into a FastAPI application for an easy and fun demo.

[Code]

2023

Ayoub EL HOUDRI

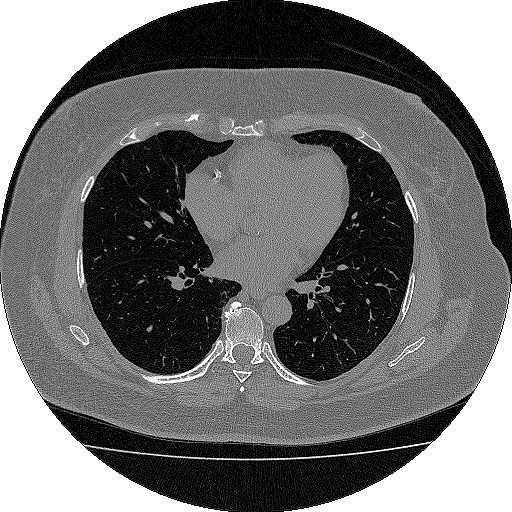

This internship project focuses on improving lung segmentation, a critical step in identifying lung cancer. The primary objective is to delineate lung areas more accurately, especially in complex cases. Drawing inspiration from Wasserstein Generative Adversarial Networks (WGANs), we adapt how the system learns and incorporate prior knowledge about lung characteristics, with the aim of improving the precision and reliability of lung segmentation.

The significance of this research project lies in its potential impact on advancing lung cancer screening techniques. When we can accurately pinpoint the lung areas, it becomes easier to detect potential health issues. Our ultimate goal is to establish a solid framework for more effective and precise cancer screening, using advanced methods in medical imaging. By concentrating on enhancing this initial step, we hope to contribute to the development of improved strategies for diagnosing and treating lung cancer.

[Report]

Ayoub EL HOUDRI

This project was completed as part of the Challenge Data, which was managed by the Data team at ENS Paris and carried out in partnership with the Collège de France and the DataLab at Institut Louis Bachelier.

The challenge was proposed by Qube Research and Technologies and aimed to model the daily price variation of highly volatile electricity futures contracts in France and Germany.

The goal was to build a model that gives a good estimate of the daily price variation in these two countries despite the highly volatile nature of electricity futures contracts. Models were evaluated using Spearman's rank correlation between the model's output and the actual daily price changes on a test sample.
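
Spearman's correlation is Pearson's correlation computed on ranks, so it only rewards getting the ordering of the daily changes right. A minimal NumPy sketch of the metric (average ranks for ties) with made-up numbers; it is not the challenge's official scoring code, and `scipy.stats.spearmanr` computes the same quantity.

```python
import numpy as np

def rankdata(x) -> np.ndarray:
    """Ranks starting at 1; tied values receive the average of their ranks."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x), dtype=float)
    ranks[order] = np.arange(1, len(x) + 1)
    # Replace the ranks of tied values by their mean.
    for value in np.unique(x):
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def spearman(pred, target) -> float:
    """Spearman rank correlation between predictions and actual price changes."""
    return float(np.corrcoef(rankdata(pred), rankdata(target))[0, 1])

pred = np.array([0.1, -0.3, 0.4, 0.0])      # hypothetical model outputs
actual = np.array([1.2, -2.0, 3.5, 0.3])    # hypothetical daily price changes
print(spearman(pred, actual))  # close to 1.0: both series have the same ordering
```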

Despite the complexity and volatility of the electricity futures market, the model achieved a high level of accuracy, demonstrating the value of combining multiple explanatory variables to model these highly volatile financial instruments.

Ayoub EL HOUDRI, Prof. Philippe GAUSSIER

To locate ourselves in a given environment, we use information of different kinds, which is merged into a coherent perception of the environment.

When a rat learns two different environments that are then connected, we observe a "remapping" of the activity of its place cells (similar to the disorientation we can feel in certain environments). This remapping can be explained by the fact that, once the two environments are connected, places must be defined in a common reference frame.

Starting from the idea that a place can be defined both by a conjunction of visual cues and by path integration information relative to an arbitrary origin, we study how the association between visual place and path integration can be modeled to explain the observed remapping mechanisms and, eventually, to enable new recalibration methods for mobile robots or autonomous vehicles.
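
Path integration can be illustrated as dead reckoning: self-motion signals (speed and heading) are accumulated into a position estimate relative to an arbitrary origin. The minimal sketch below is illustrative only, not the neural model from this project; the speeds, headings, time step, and drift values are hypothetical. It also shows why recalibration (for instance from visual cues) is needed: a heading error that grows over time leaves the estimate drifting away from the true position.

```python
import math

def integrate_path(steps, dt=1.0, start=(0.0, 0.0)):
    """Dead reckoning: integrate (speed, heading in radians) pairs into (x, y) positions."""
    x, y = start
    trajectory = [(x, y)]
    for speed, heading in steps:
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        trajectory.append((x, y))
    return trajectory

# Walk a unit square (east, north, west, south): the estimate returns to the origin.
square = [(1.0, 0.0), (1.0, math.pi / 2), (1.0, math.pi), (1.0, 3 * math.pi / 2)]
print(integrate_path(square)[-1])  # near (0, 0), up to floating-point error

# Same walk with a heading error that grows by 0.1 rad per step (e.g. sensor drift).
drifted = [(s, h + 0.1 * i) for i, (s, h) in enumerate(square)]
print(integrate_path(drifted)[-1])  # no longer at the origin
```

Note that a constant heading offset would only rotate the whole square and still bring the estimate back to the origin; it is the accumulating error that makes the loop fail to close.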

This work should also help us better understand how the hippocampus builds and maintains a coherent working memory over time.

[Article] [Code]

2022

Ayoub EL HOUDRI, Dr. François MAUBLANC, Dr. Pierre ANDRY

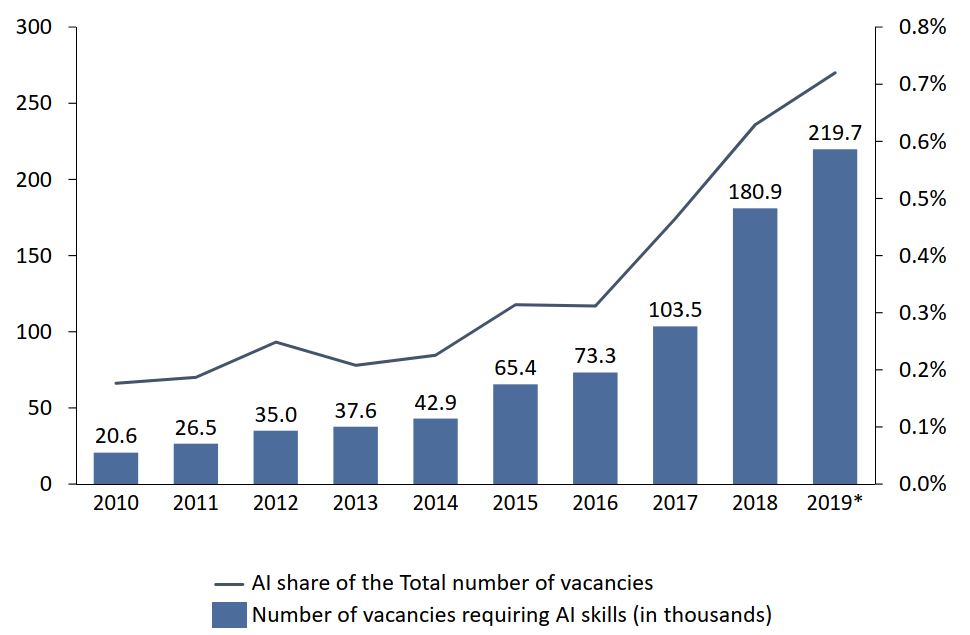

This project is carried out in partnership with the University of Bordeaux, the University of Nice Côte d'Azur and the CNRS. It studies the impact of AI on the labor market.

This impact will be studied using several ML-based techniques: we first gather a database on which models are trained and evaluated, then use these models to produce results to be analyzed, discussed, and published.

The project is still in progress: we are currently gathering and processing data (financial indicators and information on companies specializing in AI) from the website Pappers. For further details on this first part of the project, see the code below.

[Project Slides] [Code]

Ayoub EL HOUDRI

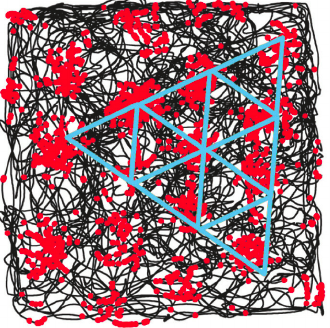

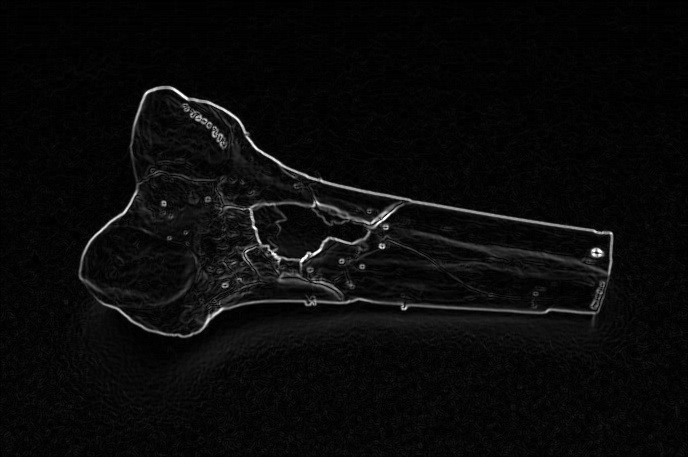

Edge detection is an image segmentation technique that detects the edges or lines in an image and outlines them. Its main purpose is to simplify the image data in order to minimize the amount of data to be processed. An edge is generally defined as the set of boundary pixels between two regions with different image amplitude attributes, such as different constant luminance or tristimulus values.

In this project, we present methods for edge-based image segmentation using five techniques: the Sobel, Prewitt, Laplacian, Roberts, and Canny operators. We apply all five to the same image and compare them in order to choose the best technique for edge-based segmentation.
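
As an illustration of the first of these operators, here is a minimal NumPy sketch of Sobel edge detection: filter the image with the horizontal and vertical Sobel kernels, take the gradient magnitude, and threshold it. The threshold and the synthetic step image are assumptions, and this is not the project's code.

```python
import numpy as np

# Sobel kernels for horizontal (x) and vertical (y) intensity gradients.
KX = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
KY = KX.T

def filter3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Apply a 3x3 kernel (as cross-correlation) over the valid interior of the image."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * img[i:i + h - 2, j:j + w - 2]
    return out

def sobel_edges(img: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean edge map: True where the gradient magnitude exceeds the threshold."""
    gx = filter3x3(img, KX)
    gy = filter3x3(img, KY)
    return np.hypot(gx, gy) > threshold

img = np.zeros((6, 6))
img[:, 3:] = 1.0                      # vertical step edge between columns 2 and 3
edges = sobel_edges(img, threshold=1.0)
print(edges.astype(int))              # columns 1 and 2 of the 4x4 output are edges
```

Using cross-correlation instead of a flipped-kernel convolution only changes the sign of the gradients, not their magnitude. The Prewitt operator differs only in its weights (1, 1, 1 instead of 1, 2, 1 across the kernel).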

[Project Slides] [Code]

Ayoub EL HOUDRI

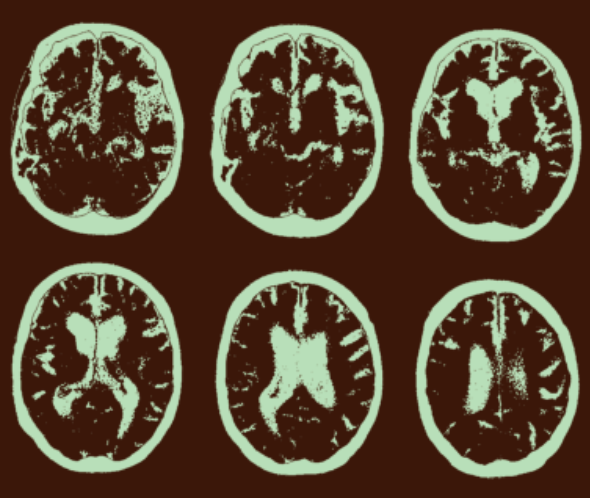

In brain MRI analysis, image segmentation is commonly used for measuring and visualizing the brain's anatomical structures, for analyzing brain changes, for delineating pathological regions, and for surgical planning and image-guided interventions.

It is one of the most time-consuming and challenging procedures in a clinical environment. Recently, a drastic increase in the number of brain disorders has been noted. This has indirectly led to an increased demand for automated brain segmentation solutions to assist medical experts in early diagnosis and treatment interventions.

The K-Means clustering algorithm is an unsupervised algorithm, used here to segment the region of interest from the background. It partitions the given data into K clusters, assigning each point to the nearest of K centroids.

The algorithm is used with unlabeled data (i.e., data without defined categories or groups). The goal is to find groups of similar data points, where K is the number of groups.
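
A minimal NumPy sketch of K-Means on pixel intensities, which is one way to split a grayscale slice into K intensity classes. The synthetic 8x8 "slice", K = 2, the quantile-based initialization, and the iteration cap are assumptions for illustration; this is not the project's code.

```python
import numpy as np

def kmeans_1d(values: np.ndarray, k: int, iters: int = 20) -> tuple[np.ndarray, np.ndarray]:
    """Cluster scalar intensities into k groups; returns (labels, centroids)."""
    values = values.astype(float).ravel()
    # Deterministic initialization: spread centroids over the intensity quantiles.
    centroids = np.quantile(values, np.linspace(0, 1, k))
    for _ in range(iters):
        # Assignment step: each pixel joins its nearest centroid.
        labels = np.argmin(np.abs(values[:, None] - centroids[None, :]), axis=1)
        # Update step: each centroid moves to the mean of its pixels (kept if empty).
        new = np.array([values[labels == c].mean() if np.any(labels == c) else centroids[c]
                        for c in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return labels, centroids

# Synthetic slice: dark background with a bright square "region of interest".
img = np.full((8, 8), 20.0)
img[2:6, 2:6] = 200.0
labels, centroids = kmeans_1d(img, k=2)
mask = labels.reshape(img.shape) == np.argmax(centroids)  # pixels of the brightest cluster
print(centroids, mask.sum())  # the bright cluster covers the 16 pixels of the square
```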

[Code]

Ayoub EL HOUDRI, Mlamali SAIDSALIMO

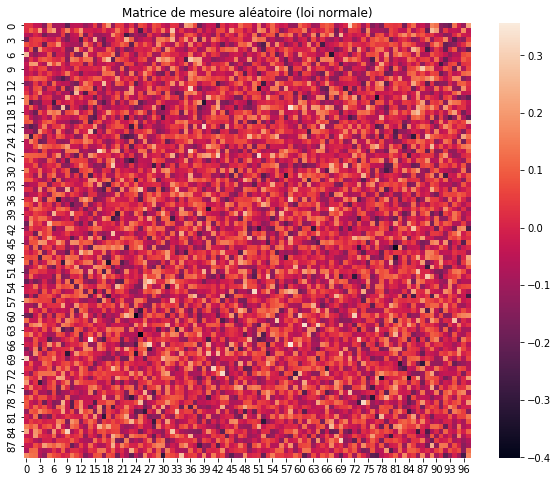

A common goal of the engineering field of signal processing is to reconstruct a signal from a series of sampling measurements. In general, this task is impossible because there is no way to reconstruct a signal during the times that the signal is not measured. Nevertheless, with prior knowledge or assumptions about the signal, it turns out to be possible to perfectly reconstruct a signal from a series of measurements (acquiring this series of measurements is called sampling). Over time, engineers have improved their understanding of which assumptions are practical and how they can be generalized.

An early breakthrough in signal processing was the Nyquist–Shannon sampling theorem. It states that if a real signal's highest frequency is less than half of the sampling rate, then the signal can be reconstructed perfectly by means of sinc interpolation. The main idea is that with prior knowledge about constraints on the signal's frequencies, fewer samples are needed to reconstruct the signal.
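
The reconstruction formula behind the theorem (Whittaker–Shannon interpolation) is x(t) = Σₙ x[n] · sinc((t − nT)/T), where T is the sampling period. A short NumPy sketch, truncated to a finite number of samples, so the reconstruction is only approximate and is evaluated away from the window edges; the 3 Hz test tone and the 20 Hz sampling rate are arbitrary choices.

```python
import numpy as np

def sinc_reconstruct(samples: np.ndarray, fs: float, t: np.ndarray) -> np.ndarray:
    """Whittaker-Shannon interpolation of samples taken at rate fs, evaluated at times t."""
    n = np.arange(len(samples))
    T = 1.0 / fs
    # np.sinc is the normalized sinc: sin(pi x) / (pi x).
    return np.sum(samples[None, :] * np.sinc((t[:, None] - n[None, :] * T) / T), axis=1)

f0, fs = 3.0, 20.0                       # 3 Hz tone sampled at 20 Hz (> 2 * 3 Hz)
n = np.arange(400)                       # 20 s of samples
samples = np.sin(2 * np.pi * f0 * n / fs)
t = np.linspace(9.0, 11.0, 50)           # evaluate in the middle of the window
error = np.max(np.abs(sinc_reconstruct(samples, fs, t) - np.sin(2 * np.pi * f0 * t)))
print(error)  # small: truncating the infinite sum is the only source of error
```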

Around 2004, Emmanuel Candès, Justin Romberg, Terence Tao, and David Donoho proved that given knowledge about a signal's sparsity, the signal may be reconstructed with even fewer samples than the sampling theorem requires. This idea is the basis of compressed sensing.
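
Sparse recovery can be sketched with Orthogonal Matching Pursuit (OMP), a greedy algorithm and only one of several possible recovery methods (ℓ1 minimization is the approach analyzed in the original compressed sensing papers). The example recovers a 3-sparse vector of length 100 from 40 random Gaussian measurements; the sizes, the random seed, and the choice of OMP are assumptions, and the project's own method may differ.

```python
import numpy as np

def omp(A: np.ndarray, y: np.ndarray, sparsity: int) -> np.ndarray:
    """Orthogonal Matching Pursuit: greedily estimate a sparse x such that A @ x ≈ y."""
    residual = y.copy()
    support: list[int] = []
    coef = np.zeros(0)
    for _ in range(sparsity):
        # Select the column most correlated with the part of y not yet explained.
        support.append(int(np.argmax(np.abs(A.T @ residual))))
        # Least-squares fit on the selected columns, then update the residual.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x = np.zeros(A.shape[1])
    x[support] = coef
    return x

rng = np.random.default_rng(0)
n, m = 100, 40                               # signal length, number of measurements
x_true = np.zeros(n)
x_true[[5, 42, 77]] = [2.0, -1.5, 1.0]       # 3-sparse signal
A = rng.normal(size=(m, n)) / np.sqrt(m)     # random Gaussian sensing matrix
y = A @ x_true                               # 40 measurements of a length-100 signal
x_hat = omp(A, y, sparsity=3)
print(np.max(np.abs(x_hat - x_true)))        # near zero when recovery succeeds
```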

[Report] [Code]

Ayoub EL HOUDRI

In computer graphics, color quantization or color image quantization is quantization applied to color spaces; it is a process that reduces the number of distinct colors used in an image, usually with the intention that the new image should be as visually similar as possible to the original image.

Computer algorithms to perform color quantization on bitmaps have been studied since the 1970s. Color quantization is useful for displaying images with many colors on devices that can only display a limited number of colors, usually due to memory limitations, and enables efficient compression of certain types of images.
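
The simplest form of color quantization is uniform quantization: each RGB channel is snapped to a fixed number of evenly spaced levels, so the palette has at most levels³ colors. A minimal NumPy sketch, assuming 8-bit RGB arrays and a random test image; adaptive methods such as k-means or median cut usually preserve the image better for the same palette size. This is an illustration, not the project's code.

```python
import numpy as np

def uniform_quantize(img: np.ndarray, levels: int) -> np.ndarray:
    """Map each 8-bit channel value to the nearest of `levels` evenly spaced values."""
    step = 255.0 / (levels - 1)
    return (np.round(img.astype(float) / step) * step).round().astype(np.uint8)

def count_colors(img: np.ndarray) -> int:
    """Number of distinct RGB triples in an H x W x 3 image."""
    return len(np.unique(img.reshape(-1, 3), axis=0))

rng = np.random.default_rng(1)
img = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)  # random test image
small = uniform_quantize(img, levels=4)                        # at most 4**3 = 64 colors
print(count_colors(img), "->", count_colors(small))
```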

[Code]

Ayoub EL HOUDRI

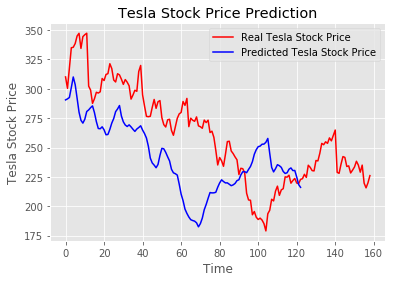

Predicting how the stock market will behave is one of the most difficult tasks. Many variables come into play: physical versus psychological factors, rational and irrational behaviour, and so on.

All of these factors combine to make share prices volatile and hard to predict with any degree of certainty. Can we use AI to our advantage in this space? AI approaches may reveal patterns and insights we hadn't seen before, which can then be used to make more accurate predictions, using features such as a company's latest announcements, its quarterly income figures, and so on.

In this report, we work with publicly available data on a listed company's stock prices and use machine learning, specifically an LSTM network, to forecast its future stock price.
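
Before training an LSTM, a price series is usually turned into supervised samples: each input is a window of the previous `lookback` prices and the target is the next price. A minimal NumPy sketch of that step; the lookback of 3 and the toy prices are assumptions, and the LSTM model itself is not shown.

```python
import numpy as np

def make_windows(series: np.ndarray, lookback: int) -> tuple[np.ndarray, np.ndarray]:
    """Return X of shape (samples, lookback, 1) and y of shape (samples,)."""
    X = np.stack([series[i:i + lookback] for i in range(len(series) - lookback)])
    y = series[lookback:]
    # LSTM layers expect (samples, timesteps, features); the single feature is the price.
    return X[..., None], y

prices = np.array([10.0, 10.5, 10.2, 10.8, 11.0, 11.3])  # hypothetical closing prices
X, y = make_windows(prices, lookback=3)
print(X.shape, y)  # 3 windows of 3 prices, each paired with the next price
```

In practice the prices are typically scaled (for example to [0, 1]) before windowing, and the split into training and test sets is done chronologically to avoid leaking future information.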

[Code]

2021

Ayoub EL HOUDRI, Dr. Katharina EISSING

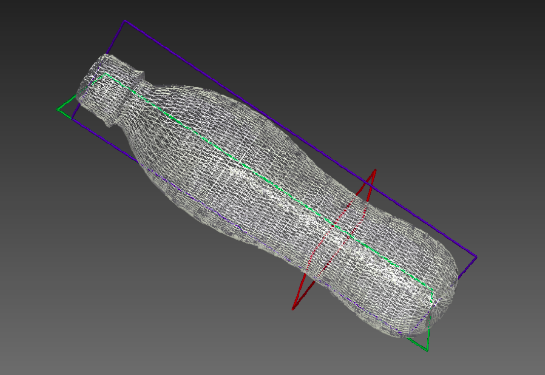

This project took place during my summer internship as a Data Science & Computer Vision Intern in the R&D department of Digimind Labs in Berlin, as part of my first year of engineering studies at CY Tech.

I was in charge of shape reconstruction of plastic packaging using computer vision and machine learning algorithms, as well as generating synthetic data in Python with parametric mesh design tools and CAD manipulation libraries.

For confidentiality reasons, the main code for this project cannot be published. Below you will find the project report, which I obtained permission to make public.

[Report]

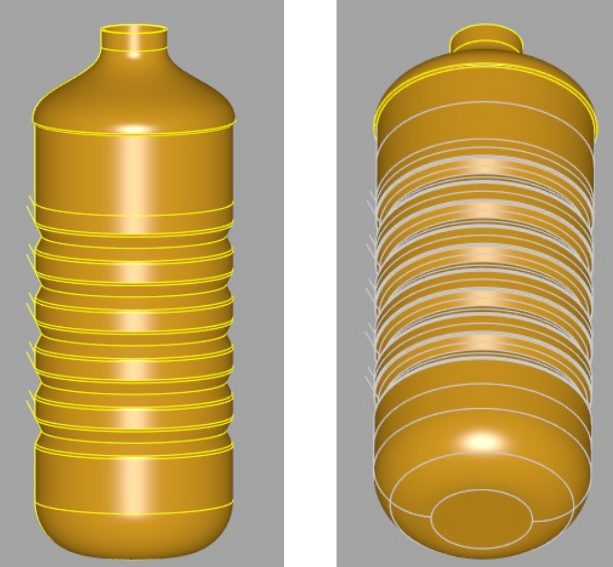

Ayoub EL HOUDRI, Dr. Katharina EISSING

This subproject took place during my summer internship. It aims to generate parametric data of designed 3D bottles in order to train the Pixel2Mesh algorithm on it and adapt it to reconstruct 3D shapes of plastic bottles (STL format) from a single RGB image of each bottle.

[Report] [Code]

Ayoub EL HOUDRI, Nawfel BACHA

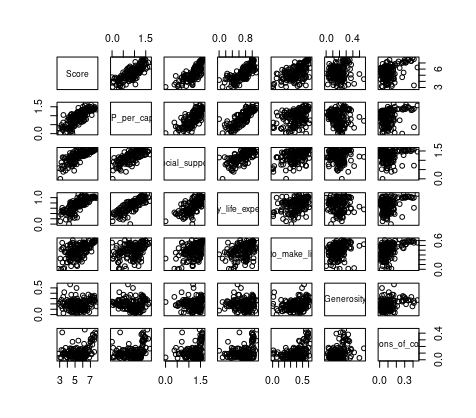

The World Happiness Report is a landmark survey of the state of happiness in the world. The first report was published in 2012, the second in 2013, the third in 2015, and the fourth, the 2016 Update, in 2016. The World Happiness Report 2017, which ranks 155 countries by their level of happiness, was released at the United Nations at an event celebrating the International Day of Happiness on March 20. The reports examine the state of happiness in the world today and show how the new science of happiness explains personal and national variations in happiness.

This project consists of building a multiple linear regression model to explain the Score variable (the happiness indicator of each country) using 6 explanatory variables: GDP_per_capita, Social_support, Healthy_life_expectancy, Freedom_to_make_life_coices, Generosity and Perception_of_corruption. We then refine the model step by step to improve its prediction quality, with a detailed error analysis at each step.
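
The model has the form Score = β₀ + β₁x₁ + … + β₆x₆ + ε, with one coefficient per explanatory variable, and is fitted by ordinary least squares. A minimal NumPy sketch of the fit and of the R² measure on synthetic data with known coefficients; the data and coefficient values are invented for illustration, whereas the project itself works on the World Happiness data.

```python
import numpy as np

def fit_ols(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Ordinary least squares with an intercept; returns [b0, b1, ..., bp]."""
    X1 = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return beta

def r_squared(X: np.ndarray, y: np.ndarray, beta: np.ndarray) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    pred = beta[0] + X @ beta[1:]
    ss_res = np.sum((y - pred) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return float(1 - ss_res / ss_tot)

# Synthetic stand-in: 155 "countries" x 6 explanatory variables.
rng = np.random.default_rng(42)
X = rng.normal(size=(155, 6))
true_beta = np.array([5.0, 0.8, 0.6, 0.4, 0.3, 0.1, -0.2])  # intercept first
y = true_beta[0] + X @ true_beta[1:] + rng.normal(scale=0.1, size=155)
beta = fit_ols(X, y)
print(np.round(beta, 2), round(r_squared(X, y, beta), 3))
```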

[Report] [Code]

Ayoub EL HOUDRI, Marine RASOLOFO

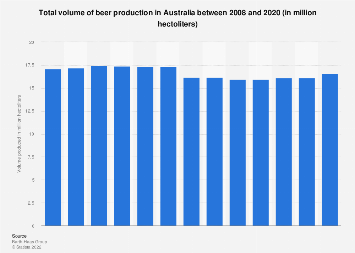

Australia’s craft beer industry is worth over AUD 800 million and grew 6.2 % from 2015 to 2020, while Australia’s beer manufacturing industry as a whole declined 1.8 % in the same time period.

Time series forecasting consists of making scientific predictions based on historical, time-stamped data. It involves building models through historical analysis and using them to make observations and drive future strategic decision-making.

In this project, I used different time series forecasting methods to study the evolution of beer production in Australia over time.
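
A common baseline for strongly seasonal series such as beer production is the seasonal naive forecast: each future period is predicted by the value observed in the same period of the last season. A minimal sketch with a hypothetical toy series and a season length of 4 (quarterly data; monthly data would use 12); it is a benchmark to compare forecasting methods against, not one of the methods from the report.

```python
import numpy as np

def seasonal_naive(history: np.ndarray, season: int, horizon: int) -> np.ndarray:
    """Forecast `horizon` steps ahead by repeating the most recent season of observations."""
    last_season = history[-season:]
    reps = int(np.ceil(horizon / season))
    return np.tile(last_season, reps)[:horizon]

def mae(actual: np.ndarray, forecast: np.ndarray) -> float:
    """Mean absolute error of a forecast."""
    return float(np.mean(np.abs(actual - forecast)))

# Hypothetical quarterly production figures (not real data): two years of history.
history = np.array([440, 400, 420, 500, 445, 405, 425, 505], dtype=float)
forecast = seasonal_naive(history, season=4, horizon=6)
print(forecast)  # repeats the last four quarters: 445, 405, 425, 505, 445, 405
```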

[Report] [Code]

Ayoub EL HOUDRI

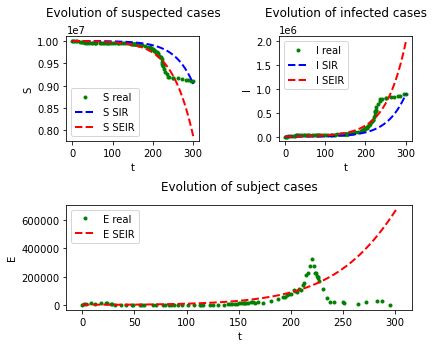

Compartmental models are a very general modelling technique. They are often applied to the mathematical modelling of infectious diseases. The population is assigned to compartments with labels – for example, S, I, or R (Susceptible, Infectious, or Recovered). People may progress between compartments. The order of the labels usually shows the flow patterns between the compartments; for example SEIS means susceptible, exposed, infectious, then susceptible again.

The models are most often run with ordinary differential equations (which are deterministic), but can also be used with a stochastic (random) framework, which is more realistic but much more complicated to analyze.

This project is an implementation of both SIR and SEIR models applied to the SARS-CoV-2 virus in order to predict things such as how the virus spreads, how many individuals are infected, and to estimate various epidemiological parameters such as the reproductive number. Such models can show how different public health interventions may affect the outcome of the epidemic, and what the most efficient technique is for issuing a limited number of vaccines in a given population.
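
The SIR model is the system dS/dt = −βSI/N, dI/dt = βSI/N − γI, dR/dt = γI, with basic reproduction number R₀ = β/γ (SEIR adds an exposed compartment between S and I). A minimal forward-Euler sketch; the parameter values are illustrative assumptions, not estimates fitted to SARS-CoV-2 data, and an adaptive ODE solver such as SciPy's `solve_ivp` would usually be preferred in practice.

```python
import numpy as np

def simulate_sir(beta: float, gamma: float, n: float, i0: float, days: int, dt: float = 0.1):
    """Integrate the SIR equations with forward Euler; returns arrays S, I, R sampled every dt."""
    steps = int(round(days / dt))
    s, i, r = np.empty(steps + 1), np.empty(steps + 1), np.empty(steps + 1)
    s[0], i[0], r[0] = n - i0, i0, 0.0
    for k in range(steps):
        new_inf = beta * s[k] * i[k] / n * dt   # S -> I flow during dt
        new_rec = gamma * i[k] * dt             # I -> R flow during dt
        s[k + 1] = s[k] - new_inf
        i[k + 1] = i[k] + new_inf - new_rec
        r[k + 1] = r[k] + new_rec
    return s, i, r

beta, gamma = 0.3, 0.1            # illustrative daily rates, so R0 = beta / gamma = 3
S, I, R = simulate_sir(beta, gamma, n=1_000_000, i0=10, days=300)
print(f"R0 = {beta / gamma:.1f}, peak infected = {I.max():.0f}, "
      f"final recovered share = {R[-1] / 1e6:.2f}")
```

Because every unit leaving one compartment enters another, S + I + R stays equal to the population size, which is a useful sanity check when extending the sketch to SEIR.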

[Code]